AL is a niche language used exclusively for Microsoft Dynamics 365 Business Central, so it is likely underrepresented in LLM training data. Despite this, I wanted to test whether AI models could handle real-world AL development tasks, and how their output would compare with the work of a junior-to-mid-level AL developer. I evaluated three models:

- Claude 3.5 Sonnet (free with usage limits; otherwise approximately $20/month)

- Deepseek R1 (free)

- OpenAI o3-mini-high (paid, $20/month)

How I Designed the Test

To evaluate the LLMs in a measurable way, I wrote a structured prompt covering typical AL development tasks. Here is the prompt I used:

Implementing Customer Feedback Management in AL for Business Central.

You are an expert in Microsoft Dynamics 365 Business Central and AL development. Your task is to design and implement a customer feedback management system for orders.

The implementation should include:

- Creating a new “Customer Feedback” table and page. Define an AL table “Customer Feedback” (ID: 50100) with the following fields:

  - “Feedback ID” (Primary Key, Integer, AutoIncrement)

  - “Customer No.” (Code[20], Lookup on Customer)

  - “Order No.” (Code[20], Lookup on Sales Header)

  - “Rating” (Integer, values from 1 to 5)

  - “Comments” (Text[250])

  - “Feedback Date” (Date, default TODAY)

- Create a List Page called “Customer Feedback List” for users to enter and view feedback.

- Extending the “Sales Header” table. Add a FlowField Boolean field “Has Feedback” that is true if an order has a corresponding feedback entry and false otherwise. Modify the “Sales Order” page by adding the “Has Feedback” field after the “Work Description” field. Ensure the field is read-only (not editable).

- Implementing an Event Subscriber for feedback notification. Implement an Event Subscriber for OnAfterPostSalesDoc. When an order is invoiced, check if feedback exists in the “Customer Feedback” table. If no feedback exists, display a notification alerting the user that the invoiced order has no associated feedback.

- Creating an API page for feedback. Develop an API called “Customer Feedback API” with the following capabilities:

  - GET: Retrieve feedback data

  - POST: Submit new feedback

  - Filter options: Allow filtering by customer or rating (e.g., ?rating=5 returns only 5-star feedback)

- Creating a report for customer feedback summary. Develop an AL report named “Customer Feedback Summary” displaying:

  - “Customer No.”

  - “Customer Name”

  - “Total Feedback Count”

  - “Average Rating”

  The report should support date range filtering for analyzing feedback over a specific period.

- Implementing a Codeunit for automated email reminders. Develop a Codeunit called “Customer Feedback Reminder” that performs the following:

  - Identify orders without feedback

  - Retrieve the customer’s email from the “Customer” table

  - Send an automated email requesting feedback submission

Expected output: AL code snippets for each step, clear explanations of implementation choices, and best practices for Business Central development. Provide the implementation in a structured and professional format.

The test included the following tasks:

- Creating a new table

- Creating a new page

- Extending a standard table

- Extending a standard page

- Implementing an Event Subscriber with logic

- Generating a report (without layout)

- Creating a new API

- Writing a procedure for sending emails

Each task was scored out of 5 points, so a model could earn at most 40 points across the eight tasks.

- Each compilation or logical error resulted in a one-point deduction.

- If the generated object was completely non-functional or the logic was entirely incorrect, the task received 0 points.

- A fully functional implementation received the maximum points.

At this stage, I have not evaluated other factors, such as performance or execution efficiency.
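The scoring rules can be expressed as a tiny function. This is a minimal Python sketch for illustration only: `score_task` and its parameters are hypothetical names, and the floor at zero is an assumption, since the rules do not say what happens when a task has more than five errors.

```python
def score_task(errors, completely_broken=False, max_points=5):
    """Score one task under the scoring rules.

    errors: number of compilation or logical errors in the generated object.
    completely_broken: True if the object is entirely non-functional or its
        logic is entirely wrong, which scores 0 regardless of the error count.
    Assumption: the score never drops below 0, even with more than five errors.
    """
    if errors < 0:
        raise ValueError("errors must be non-negative")
    if completely_broken:
        return 0
    # One point deducted per error, floored at zero (assumed).
    return max(max_points - errors, 0)


print(score_task(0))                          # fully functional: 5
print(score_task(2))                          # two errors: 3
print(score_task(1, completely_broken=True))  # non-functional: 0
```

Treating "completely broken" as a separate flag, rather than as a large error count, mirrors the rule that an unusable object gets 0 even if only one thing is wrong with it.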

Results

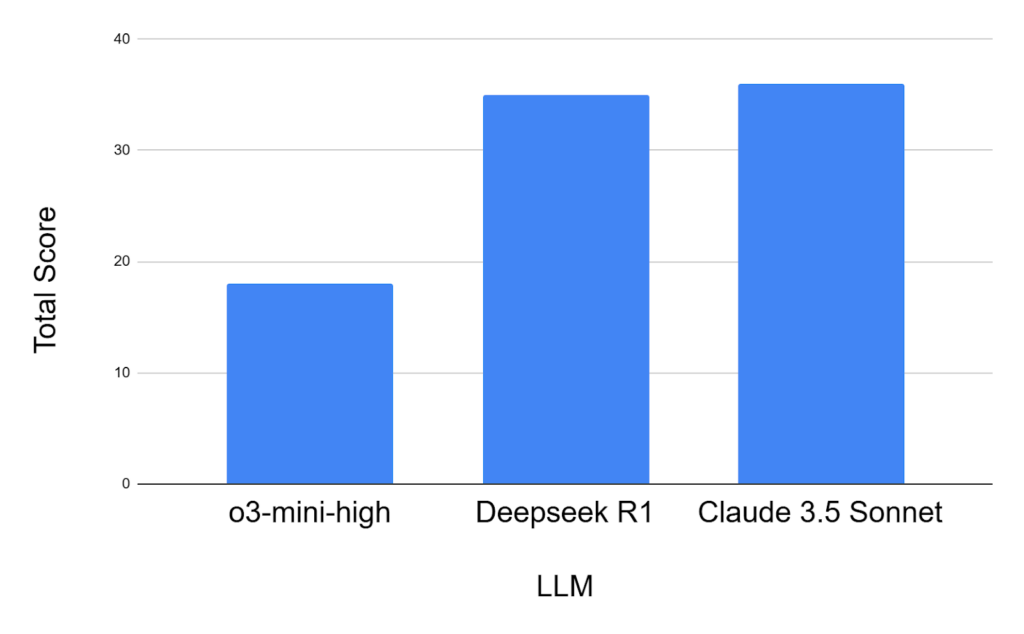

Claude 3.5 Sonnet was the top scorer (36/40), generating code that compiled fully and had only minor logical issues, which a developer without advanced AL expertise could fix. Deepseek R1 was a close second (35/40), producing well-structured output with minimal errors. OpenAI o3-mini-high was the most disappointing (18/40), struggling with both compilation and logic issues despite being the only model in the test that requires a paid subscription.

| Task | o3-mini-high | Deepseek R1 | Claude 3.5 Sonnet |
|---|---|---|---|
| Table creation | 4 | 5 | 4 |
| Page creation | 3 | 5 | 5 |
| Table extension creation | 2 | 4 | 5 |
| Page extension creation | 3 | 3 | 5 |
| API page creation | 1 | 5 | 5 |
| Report creation | 2 | 3 | 4 |
| Event subscriber logic creation | 3 | 5 | 5 |
| Codeunit creation | 0 | 5 | 3 |
| Total (out of 40) | 18 | 35 | 36 |
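As a quick cross-check of the totals, the short Python sketch below re-adds each model's per-task scores from the table and expresses each total as a share of the 40-point maximum (8 tasks at 5 points each). The data layout and the `summarize` helper are illustrative only.

```python
# Per-task scores copied from the results table, in row order:
# table, page, table extension, page extension, API page,
# report, event subscriber, codeunit.
SCORES = {
    "o3-mini-high": [4, 3, 2, 3, 1, 2, 3, 0],
    "Deepseek R1": [5, 5, 4, 3, 5, 3, 5, 5],
    "Claude 3.5 Sonnet": [4, 5, 5, 5, 5, 4, 5, 3],
}
MAX_PER_TASK = 5


def summarize(scores):
    """Return {model: (total, max_total, percent_of_max)} for each model."""
    summary = {}
    for model, per_task in scores.items():
        total = sum(per_task)
        max_total = MAX_PER_TASK * len(per_task)
        summary[model] = (total, max_total, 100 * total / max_total)
    return summary


for model, (total, max_total, pct) in summarize(SCORES).items():
    print(f"{model}: {total}/{max_total} ({pct:.1f}%)")
```

Running it prints 18/40 (45.0%), 35/40 (87.5%) and 36/40 (90.0%), which shows how close the top two models were and how far behind o3-mini-high finished.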

The raw responses generated by each model are provided below in .txt format.

Final Thoughts

If your goal is just to generate AL code, you can probably skip the $20/month OpenAI subscription: in this test, Deepseek R1 and Claude 3.5 Sonnet clearly outperformed OpenAI’s model (35 and 36 points versus 18). Keep in mind, however, that the free version of Claude 3.5 Sonnet is very limited, so you would need a paid subscription to use it as a daily development tool.

The quality of the generated code is similar to what you would expect from a junior-to-mid-level AL developer, or from someone with years of experience but without deep expertise in AL. This suggests that LLMs can already play a role in AL development.

So, will AI replace developers? It’s hard to say, but for now, developers with strong programming expertise and deep specialization are unlikely to be replaced. The key is to develop advanced skills and deep knowledge in specific areas, because AI is more likely to automate repetitive, lower-level tasks than to replace highly skilled professionals. The real risk is for developers who merely follow instructions without understanding what’s happening under the hood.

Meanwhile, in countries like Italy, where the demand for developers far exceeds supply, LLMs could help fill the gap. Rather than worrying about AI replacing jobs, companies should focus on leveraging these tools to boost productivity, ultimately creating greater value and driving economic growth.